Choosing the right number of testers for usability tests is crucial for maximising insights while minimising costs. Effective usability testing is essential in the design process, helping to identify potential issues that could hinder user experience. However, determining the optimal number of participants can be challenging, as it varies based on product complexity, user diversity, and specific study goals.

This article explores effective strategies for deciding participant numbers in usability studies, examining the widely accepted rules and contexts where adjustments may be necessary.

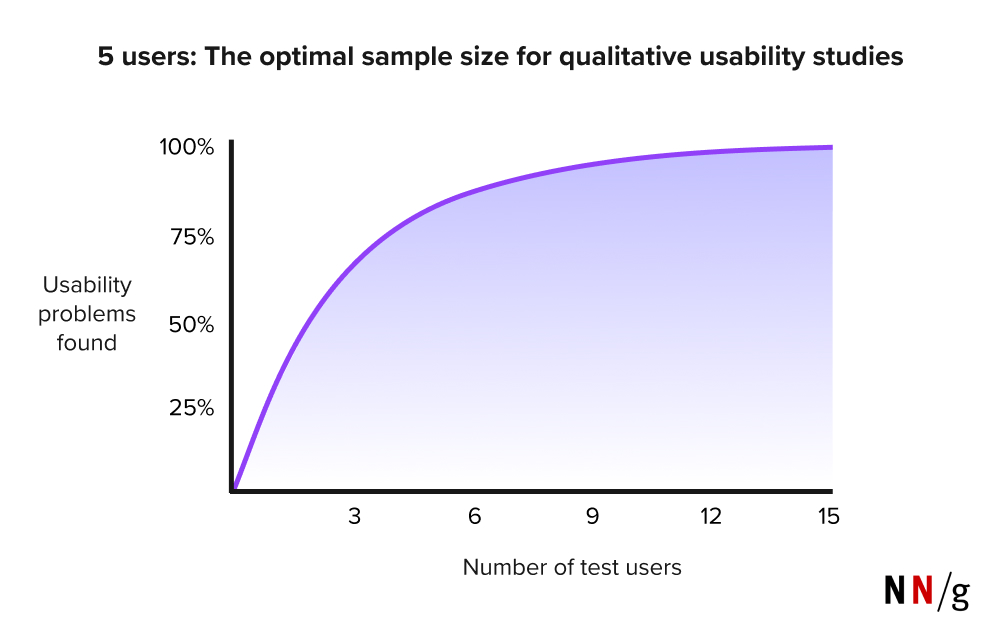

The 5-User Rule

The Nielsen Norman Group suggests that testing with just 5 participants reveals most of the usability issues a larger group would find. The rationale is that each user after the fifth mostly repeats problems already observed, so new insights decline sharply while the cost per new finding rises. By distributing resources across several small tests instead of one large study, designers can fix problems between rounds and improve usability iteratively.
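The diminishing returns behind this rule are commonly modelled with the formula published by Nielsen and Landauer, in which the share of problems found by n users is 1 - (1 - L)^n. The sketch below assumes L = 0.31, the average per-user detection rate Nielsen reports; the function name is illustrative, and your own product may well have a different rate.

```python
def proportion_found(n_users, detection_rate=0.31):
    """Expected share of usability problems found by n_users.

    Uses the Nielsen-Landauer model 1 - (1 - L)^n. The default
    L = 0.31 is an assumed average, not a property of your product.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0 <= detection_rate <= 1:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1 - (1 - detection_rate) ** n_users


if __name__ == "__main__":
    # Show how quickly the curve flattens as users are added.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} users: {proportion_found(n):.0%} of problems")
```

Under this assumption, 5 users find roughly 84% of the problems, and each further user adds less than the one before.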

Research by Laura Faulkner explores this assumption further, showing how increasing the number of participants influences the detection rate of usability problems. In this study, 60 users were tested, and random samples of 5 or more participants were drawn from the full group. The goal was to highlight the risks of relying on only 5 participants and the advantages of including more. Some random sets of 5 participants identified up to 99% of the issues, while others uncovered just 55%. With 10 participants, the minimum percentage of issues found by any set rose to 80%, and with 20 participants, this increased to 95%.
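The resampling idea can be illustrated with a small simulation. This is only a sketch on synthetic data, not a reproduction of Faulkner's study: the 30 issues, the 30% chance that a user hits any given issue, and all function names are assumptions made for illustration.

```python
import random


def simulate_users(n_users, n_issues, hit_rate, rng):
    """Return, for each synthetic user, the set of issue ids they ran into."""
    return [
        {issue for issue in range(n_issues) if rng.random() < hit_rate}
        for _ in range(n_users)
    ]


def coverage(sample, all_issues):
    """Share of all known issues found by at least one user in the sample."""
    found = set().union(*sample)
    return len(found) / len(all_issues)


def resample_coverage(users, sample_size, n_draws, rng):
    """Draw random user subsets and return (worst, average) coverage."""
    all_issues = set().union(*users)
    scores = [
        coverage(rng.sample(users, sample_size), all_issues)
        for _ in range(n_draws)
    ]
    return min(scores), sum(scores) / len(scores)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is repeatable
    # Assumed, synthetic setup: 60 users, 30 issues, 30% chance per issue.
    users = simulate_users(60, 30, 0.3, rng)
    for size in (5, 10, 20):
        worst, average = resample_coverage(users, size, 100, rng)
        print(f"{size:>2} users: worst {worst:.0%}, average {average:.0%}")
```

The worst-case figure is the one to watch: it shows how unlucky a single small panel can be, which is the risk the study set out to highlight.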

There are scenarios where the 5-user rule may not apply:

- Complex products: Products with many features and paths, such as e-commerce sites, may require more participants (10-15) to uncover a greater percentage of usability issues.

- Diverse user groups: Products with multiple target segments need participants from each segment. When the segments behave similarly, 3-4 users per segment is usually enough.

- Agile processes: When testing is repeated every iteration, running several rounds with 3 participants each can provide overlapping insights while saving costs.

- Quantitative data: For statistically significant quantitative results, around 40 participants are needed to ensure robust data.

The 40-User Rule

Different UX research methods need varying numbers of participants to achieve reliable results. Specifically, quantitative studies typically require a larger sample size. Here are some examples of recommended participant numbers for various quantitative methods:

- Heatmaps: It is advised to recruit 39 or more participants to ensure statistically significant results.

- Card sorting: A sample size of 20-30 participants is recommended.

- Tree testing: For this method, recruiting at least 50 participants is preferred to obtain meaningful insights.

In general, aiming for a sample size of approximately 40 participants is advisable for most quantitative usability studies, including surveys. Research from the Nielsen Norman Group indicates that this number yields a margin of error of about 15% at a 95% confidence level, making it a reasonable baseline for reliable findings.
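To see where a figure of this size comes from, the sketch below computes the margin of error for a binary metric such as task success. It assumes the simple normal-approximation (Wald) formula and the worst case of a 50% success rate; the Nielsen Norman Group's own calculation may use a different method, so treat the numbers as approximate.

```python
import math

Z_95 = 1.96  # z-score for a two-sided 95% confidence level


def margin_of_error(n, p=0.5, z=Z_95):
    """Margin of error for a proportion measured on n participants.

    Assumes the normal-approximation formula z * sqrt(p * (1 - p) / n).
    p = 0.5 is the worst case and gives the widest margin.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= p <= 1:
        raise ValueError("p must be between 0 and 1")
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # Compare how the margin shrinks as the sample grows.
    for n in (5, 20, 40, 100):
        print(f"{n:>3} participants: +/- {margin_of_error(n):.1%}")
```

With 40 participants the formula gives roughly 15.5%, in line with the 15% figure above. Because the margin shrinks with the square root of the sample size, halving it requires about four times as many participants.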

For further details on quantitative sample sizes, refer to the Nielsen Norman Group’s article "A Summary of Quantitative Sample Sizes", as well as the accompanying video, which humorously explains the theory behind the 40-user rule through a study involving dog ears.

Conclusion

There is no universally correct number of participants for usability studies. Factors like product type, testing objectives and user diversity all influence the ideal participant count. While 5 participants often serve as a baseline for qualitative testing, and 40 for quantitative testing, it’s important to adjust your approach based on specific study needs and context.

AI can also support the process. By quickly generating testing questions and analysing responses, it lets you report on more users in the same amount of time, which speeds up usability testing and can improve the quality of the user sentiment insights you gather.