OpenAI made a big mistake when they launched ChatGPT.

By default, if you were a new user, they would save your conversations and use them to train future models.

What this meant was that data you put into ChatGPT could potentially re-emerge randomly as part of someone else’s conversation in the future.

They didn’t have to do this, but they did, and the result is that a large proportion of the corporate world are now fearful of using large language models to process their data.

This is hindering the uptake of AI across the corporate space.

The truth is that pretrained large language models like GPT-4o are actually incredibly safe. You just need to make sure you are using them in the right way.

I’m going to try to explain how these models work, and why they are safe, using a domino-based analogy.

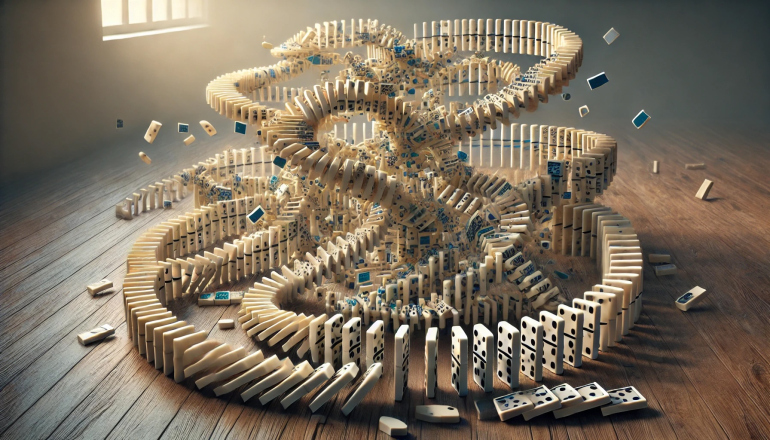

Rather than thinking of an AI model as a black box, in which “some things happen that could be bad”, think of it as a collection of standing dominoes, carefully positioned so that they fall in a very particular way.

The input to, and output of, the model is a row of dominoes. You choose what to input into the model by pushing over a selection of the “input” dominoes. This sends a cascade of falling dominoes through the model, ultimately producing an output at the end, which can be read by observing which of the “output” dominoes have fallen over.

Each domino represents a “parameter” of the model. The largest LLMs out there have hundreds of billions of parameters, so this is a large set of dominoes, but it allows for some really complex and meaningful patterns to emerge as the dominoes cascade through the model.

Once the dominoes have fallen and you have read your output, you reset the model for the next set of inputs by setting the dominoes back to their original positions.

Two key features of this domino-based model are:

- It can only process one request at a time

- It has no memory of previous requests

These features ensure that the model doesn’t retain your data or mix it with other users’ queries. Once a query is processed, the model resets, preventing your data from influencing future responses.
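To make the analogy concrete, here is a minimal Python sketch of a domino-style model. It is a toy, not how GPT-4o is implemented: the class `DominoModel`, its random weights and the thresholding rule are all assumptions made for illustration. What it shows is the property that matters here: the parameters are fixed once, and running a query reads them without ever changing them.

```python
import random


class DominoModel:
    """Toy stand-in for a pretrained model: fixed parameters, no memory."""

    def __init__(self, n_inputs, n_outputs, seed=0):
        rng = random.Random(seed)
        # The "dominoes": set up once and stored as immutable tuples,
        # so nothing that happens during a query can rearrange them.
        self._weights = tuple(
            tuple(rng.uniform(-1.0, 1.0) for _ in range(n_inputs))
            for _ in range(n_outputs)
        )

    def run(self, pushed):
        """Push some input dominoes (a list of 0s and 1s) and read the outputs."""
        if len(pushed) != len(self._weights[0]):
            raise ValueError("wrong number of input dominoes")
        # Each output domino falls (1) if its weighted sum of inputs is positive.
        return [
            1 if sum(w * x for w, x in zip(row, pushed)) > 0 else 0
            for row in self._weights
        ]


model = DominoModel(n_inputs=4, n_outputs=3)
first = model.run([1, 0, 1, 0])    # your query
model.run([0, 1, 1, 1])            # somebody else's query
again = model.run([1, 0, 1, 0])    # your query again
print(first == again)              # the other query left no trace
```

Because `run` only reads the weights, the same input always produces the same output, no matter what other queries were processed in between.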

Now let’s think about this in the context of real world LLM providers like OpenAI.

With ChatGPT, the underlying model behind the platform (currently GPT-4o) operates in the same way as my domino model. When you submit your query to ChatGPT, it gets sent to one of many GPT-4o model instances living in the cloud. This model processes your request then resets itself, ready for the next query. It’s fundamentally secure.

OpenAI choose to undermine this security by saving your input and your output, and using it to train future models. This is bad, as it means there is a small risk that your data could appear in future responses. The models they use for ChatGPT are also hosted in the United States, meaning that all of the data you send leaves Europe, which isn’t great from a GDPR perspective.
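The difference between the model and the service wrapped around it can be sketched in a few lines of Python. Everything here is hypothetical: `stateless_model`, `ChatService` and the `retain_for_training` flag are illustrative names, not anything from OpenAI’s real systems. The point is only that data retention is a choice made by the service, not something the model does.

```python
def stateless_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call: output depends only on the prompt."""
    return prompt.upper()


class ChatService:
    """Hypothetical service around a model; retention is decided here."""

    def __init__(self, retain_for_training: bool):
        self.retain_for_training = retain_for_training
        self.training_log = []  # conversations kept for future training

    def ask(self, prompt: str) -> str:
        reply = stateless_model(prompt)
        if self.retain_for_training:
            # The service, not the model, chooses to store the exchange.
            self.training_log.append((prompt, reply))
        return reply


consumer_app = ChatService(retain_for_training=True)
enterprise_api = ChatService(retain_for_training=False)

consumer_app.ask("quarterly figures")
enterprise_api.ask("quarterly figures")

print(len(consumer_app.training_log))    # one conversation retained
print(len(enterprise_api.training_log))  # nothing retained
```

Both services call exactly the same model and return the same reply. Only the one configured to retain data ends up holding a copy of your conversation.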

However, if you access the OpenAI models via the Azure OpenAI Service, you can use models that are hosted in Europe and that don’t use your data to train future models. This addresses the two main security issues that exist in ChatGPT. Amazon Bedrock on AWS also offers a wide range of safe, European-hosted models if you operate within a non-Microsoft stack.

Overall, whilst OpenAI’s approach to ChatGPT is not great, understanding how LLMs work can help alleviate the security fears associated with using an LLM to process your data.

If you are already using Azure or AWS to store or process your data, then using an LLM hosted within that platform will be no less secure than any of the other architecture that you are running on that platform, even if you are using one of the shared models.